About

Inferencer allows you to download, run and deeply control the latest SOTA AI models (GPT-OSS, DeepSeek, GLM, Kimi, Qwen and more) on your own computer.

No data is sent to the cloud for processing - maintaining your complete privacy.

Advanced inferencing controls give you complete control over the models' accuracy and outputs.

All AI processing happens on your device.

No telemetry, no background "update" checks.

Models

Start in the models section where you can select the location of existing models or download new ones directly from Hugging Face.

Chats

Select the model to interact with in the top menu bar and write a prompt to begin. At any point you can switch between models and continue the chat to see what else they can uncover. You can also selectively delete past messages to keep the model focused and less scatterbrained.

Chat Controls

Control the inferencing parameters, including batching (to inference multiple chats at the same time), intensity of processing, and model streaming (to load models larger than available memory).

Token Inspection

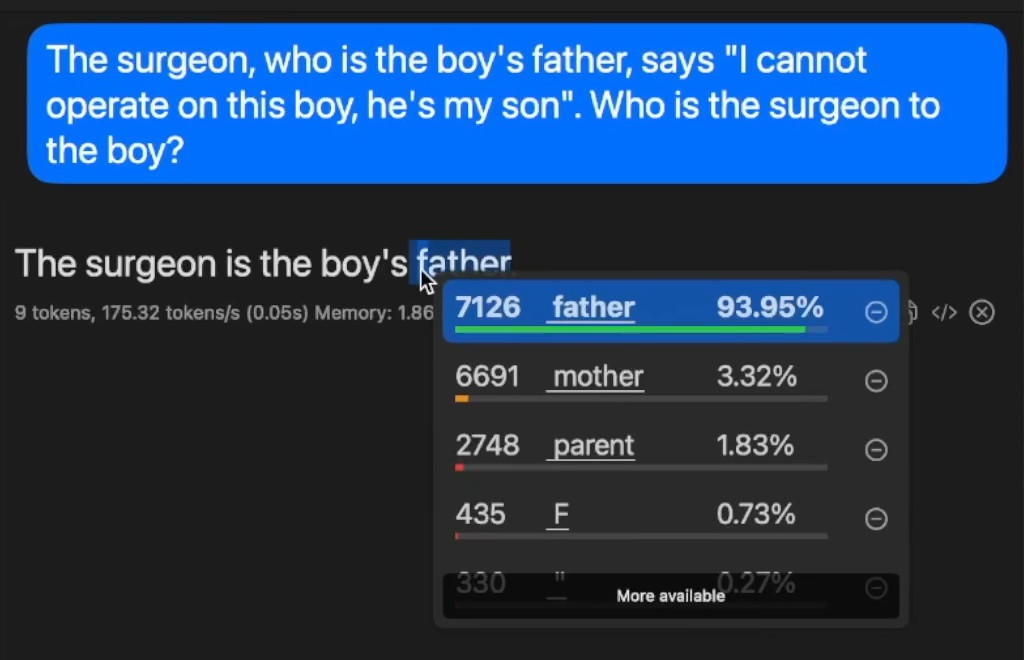

Select the inspector to peek into the inner workings of each output word and see the model's confidence levels and alternative choices.

Response Control

Expand the prompt section to utilise the prefill feature, which lets you control the output the model generates. For example, skip the preamble and direct the model to output structured HTML by starting the response with an <html> tag.

Tools

The tools editor allows you to enable built-in tools such as get_webpage_content, or add your own, so that the models can use them when needed. For example, if you'd like a webpage or search result inferenced, simply enable the tool in the Tools section and allow tool calls in the chat settings panel.

Server

If enabled, the server feature allows you to serve and connect to your own or trusted devices. No data is sent elsewhere. It also includes compatible APIs for application development.

Prompt Caching

Significantly speeds up prompt processing by prefix-matching previously processed prompts. Uses a user-configurable cache pool with optional external storage and automatic LRU (least recently used) eviction (enabled in Settings).

Note: To extend the lifetime of a cache, use this feature in conjunction with Set Date in Inference Settings or Fixed Date in Server Settings to avoid cache reprocessing when a new system date is detected.
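
To illustrate the general idea behind prefix matching with LRU eviction, here is a minimal Python sketch. It is not Inferencer's implementation: the `PrefixCache` class, the whitespace `tokenize` helper, and the example prompts are all hypothetical, and a real cache would hold the model's processed state rather than a placeholder. It also assumes the current date appears near the start of the prompt, which is one way a date change could end a prefix match early.

```python
from collections import OrderedDict


def common_prefix_len(a, b):
    """Number of leading tokens that two token sequences share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


class PrefixCache:
    """Toy prompt cache (hypothetical): longest-prefix lookup, LRU eviction."""

    def __init__(self, max_entries):
        self.max_entries = max_entries
        self.entries = OrderedDict()  # least recently used first

    def lookup(self, tokens):
        """Return how many leading tokens of `tokens` are already cached."""
        best_key, best_len = None, 0
        for key in self.entries:
            n = common_prefix_len(key, tokens)
            if n > best_len:
                best_key, best_len = key, n
        if best_key is not None:
            self.entries.move_to_end(best_key)  # mark as recently used
        return best_len

    def store(self, tokens):
        """Remember a processed prompt, evicting the least recently used."""
        key = tuple(tokens)
        self.entries[key] = True  # placeholder for the real cached state
        self.entries.move_to_end(key)
        while len(self.entries) > self.max_entries:
            self.entries.popitem(last=False)


def tokenize(text):
    # Whitespace split as a stand-in for a real tokenizer.
    return text.split()


cache = PrefixCache(max_entries=2)
system = "Today is 2025-01-01. You are a helpful assistant."
cache.store(tokenize(system + " Summarise this page."))

# Same date: the whole system prompt is reused, only the question is new.
same_day = cache.lookup(tokenize(system + " Translate this page."))

# New date: the prompts diverge at the third token, so little is reused.
next_day = cache.lookup(
    tokenize("Today is 2025-01-02. You are a helpful assistant. Translate this page.")
)
print(same_day, next_day)  # prints "8 2"
```

In this toy setup, keeping the date fixed lets eight leading tokens be reused, while a changed date cuts the match to two, so almost the entire prompt would need reprocessing.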

Distributed Inference

With distributed compute you can link together two Macs, sharing their memory to inference larger models. To use it, make sure it's enabled in both the app and server settings. Once a connection to your server is made, if both computers have the same model, a distributed compute icon will appear next to the models dropdown list. Simply tap on it to load the model for distributed compute.

Coding Tools

Built-in support for Xcode Intelligence and Visual Studio Code, including support for distributed compute and internet-hosted deployments. To set up, use the server feature with Compatibility APIs enabled and SSL disabled to allow Xcode or Visual Studio Code to use Inferencer as a service provider.

Shortcuts

Use the Shortcuts app to automate inferencing workflows (e.g., copy text from clipboard > inference > speak result).

Settings

Settings include parental controls, an automatic deletion policy and configurable font sizes.

Security

Inferencer conforms to strict, kernel-level, operating-system-enforced application sandboxing.

Privacy

By default, all AI processing happens offline and on your device. No data is sent to the cloud, for maximum privacy.

Subscribe for updates

With more features coming soon, you can be the first to know.