Artificial intelligence should not be a black box.

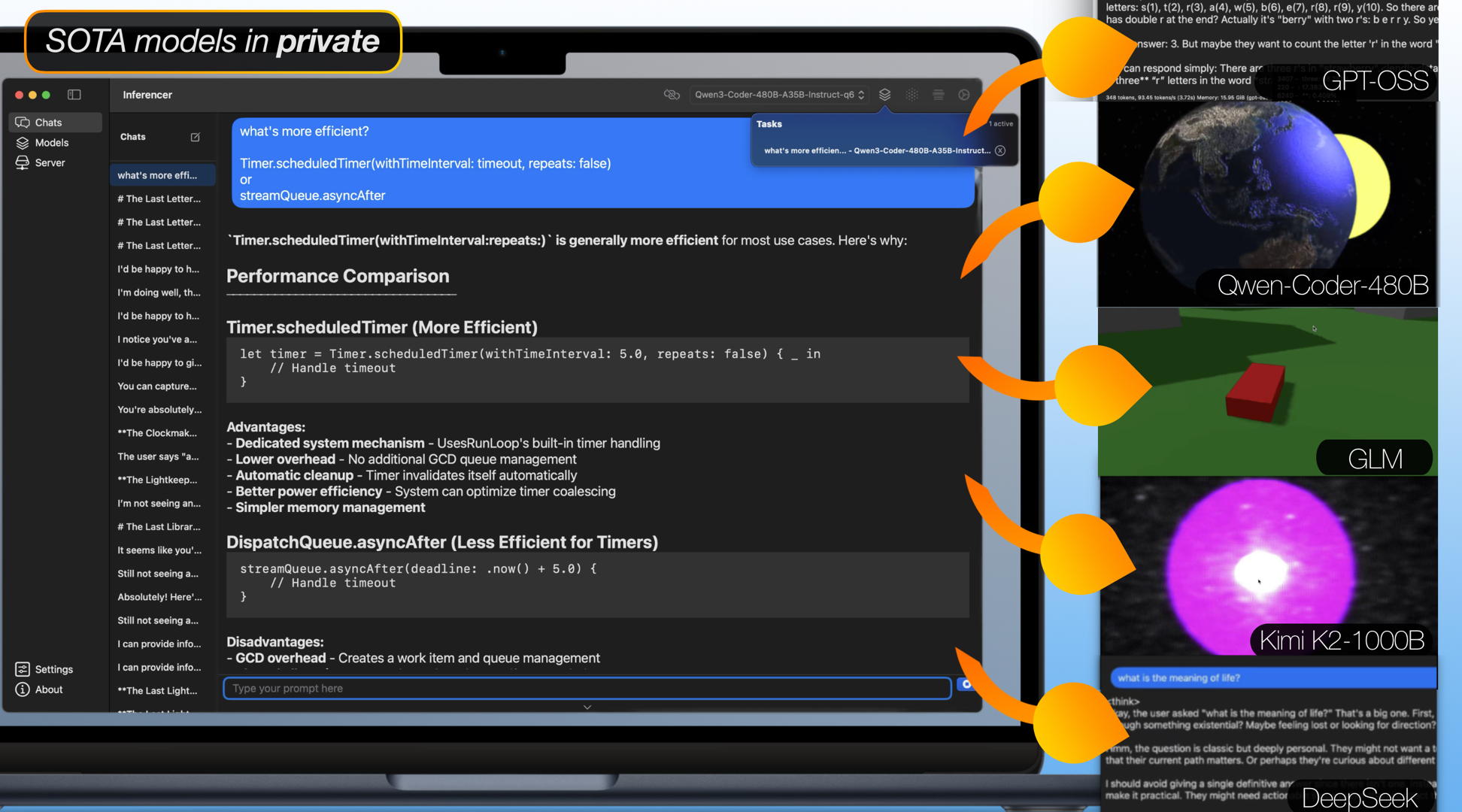

Private

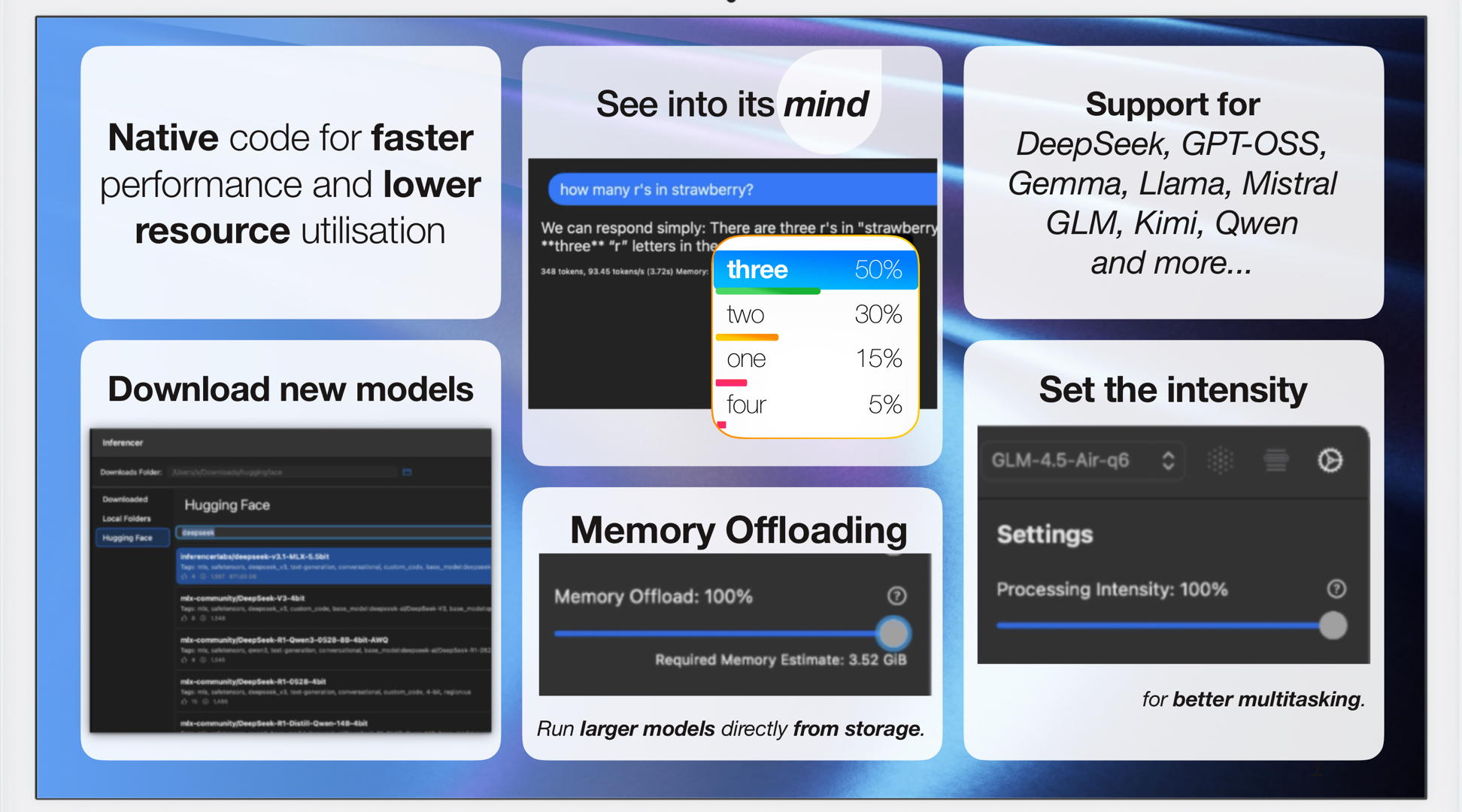

By default, all AI processing happens offline and on your device. No data is sent to the cloud for processing.

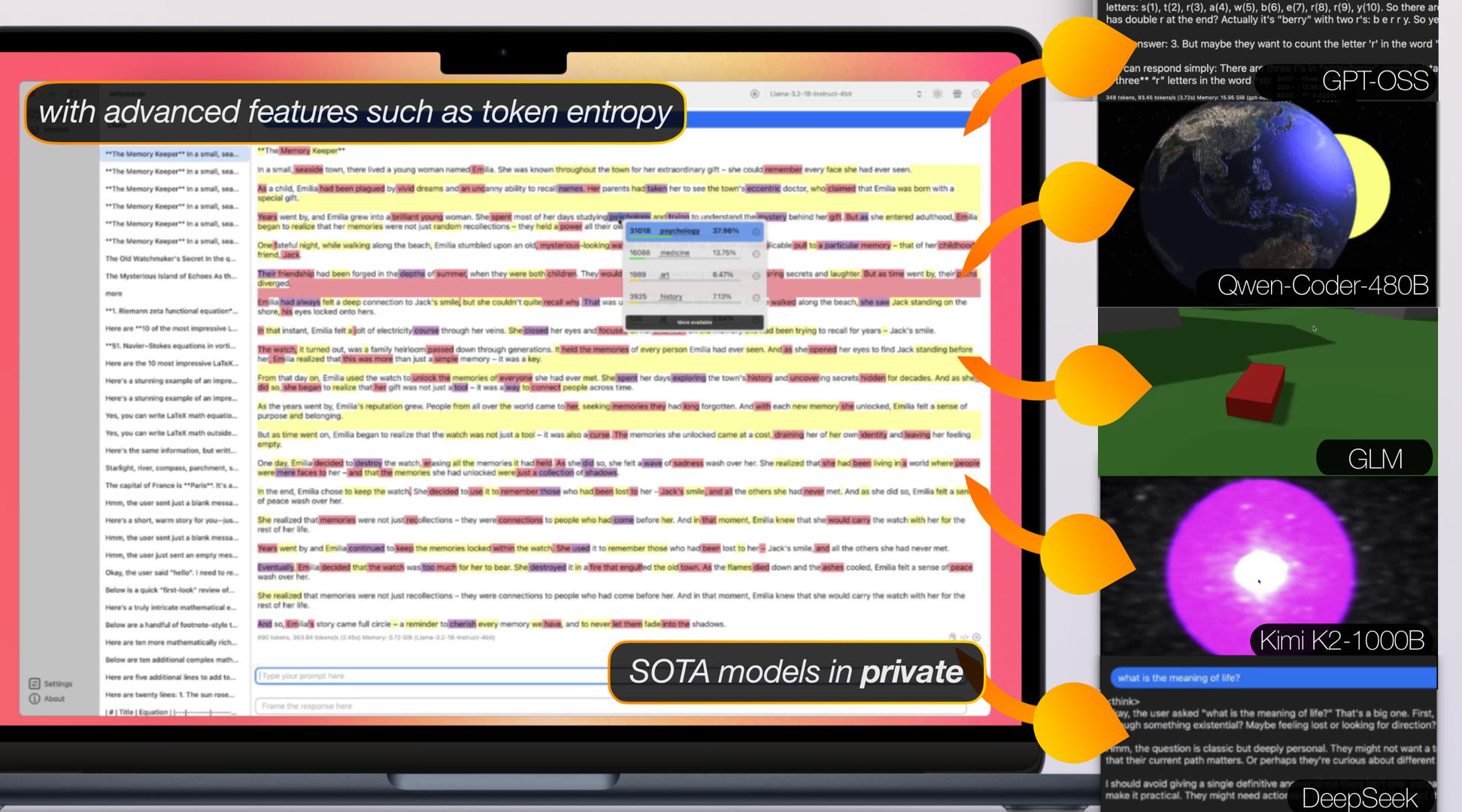

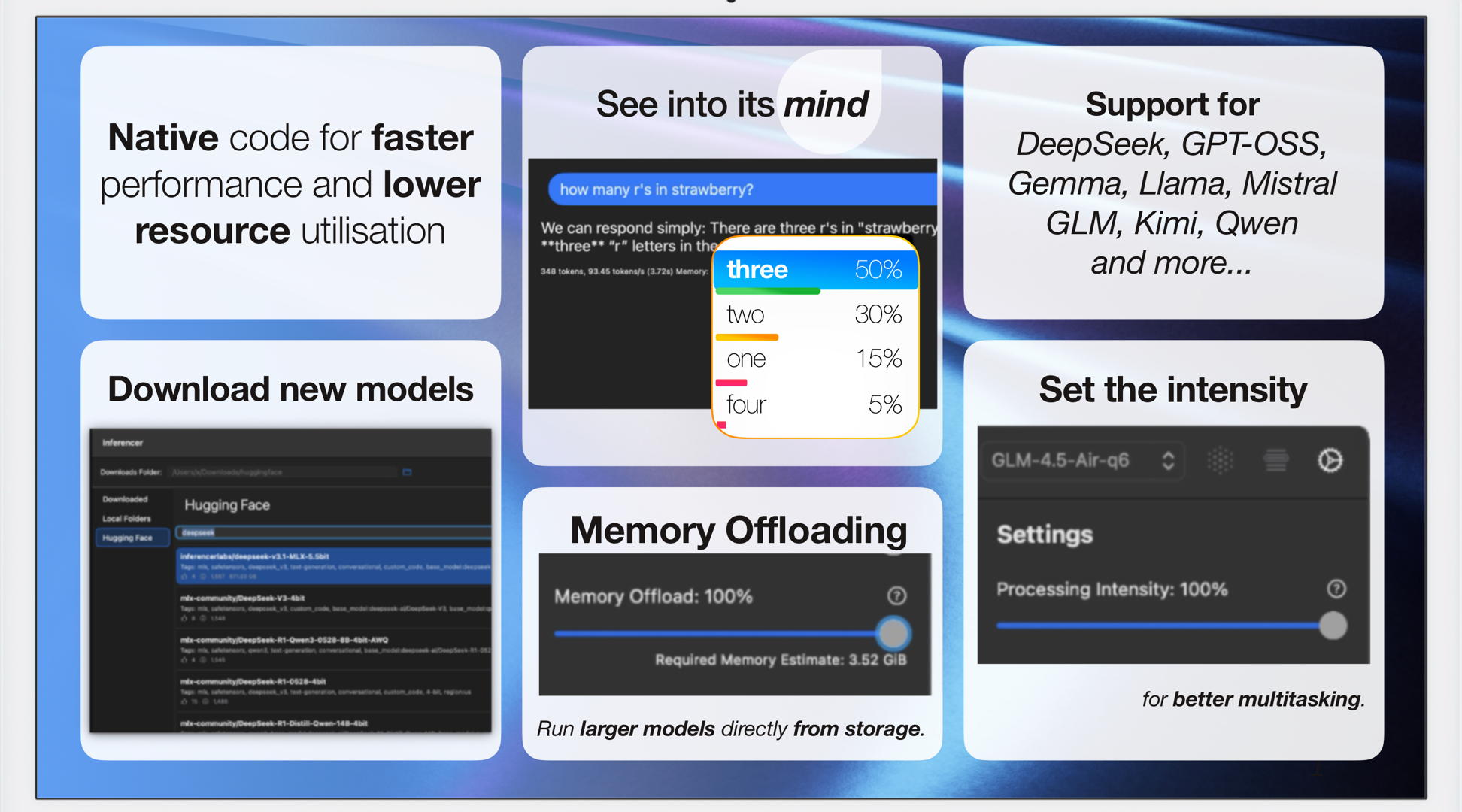

State-of-the-Art

Support for state-of-the-art (SOTA) models, the fastest inference performance, and patent-pending deep learning inference that gives you full control over your models.

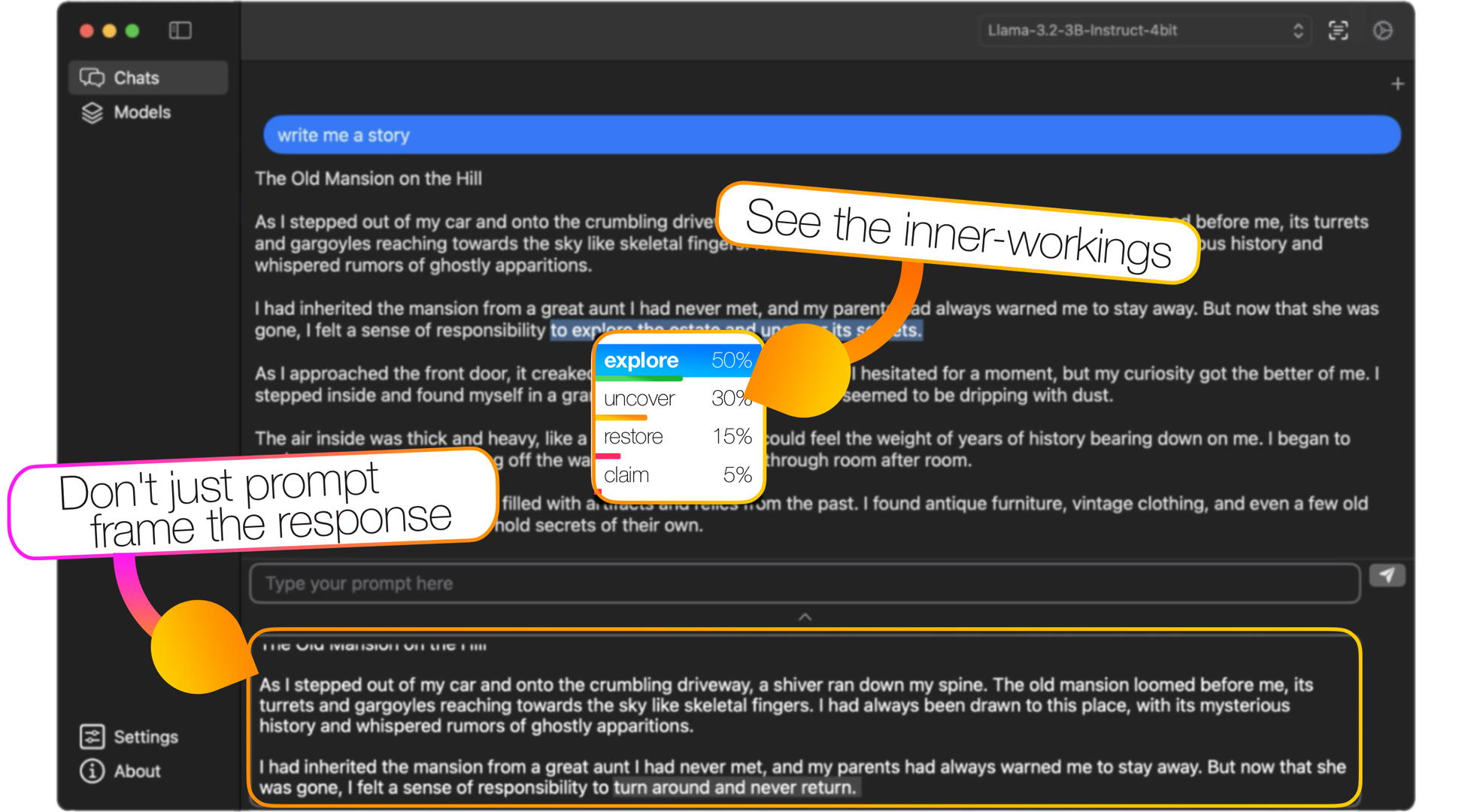

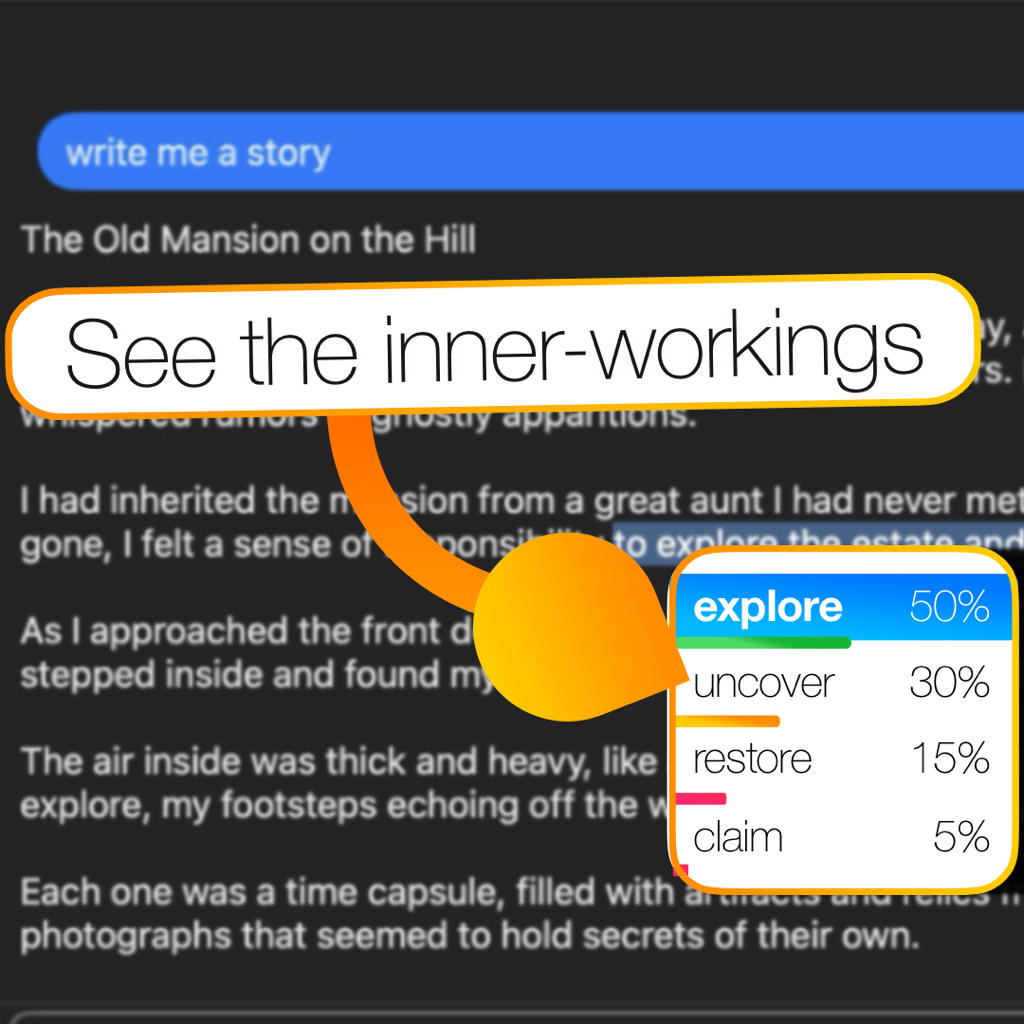

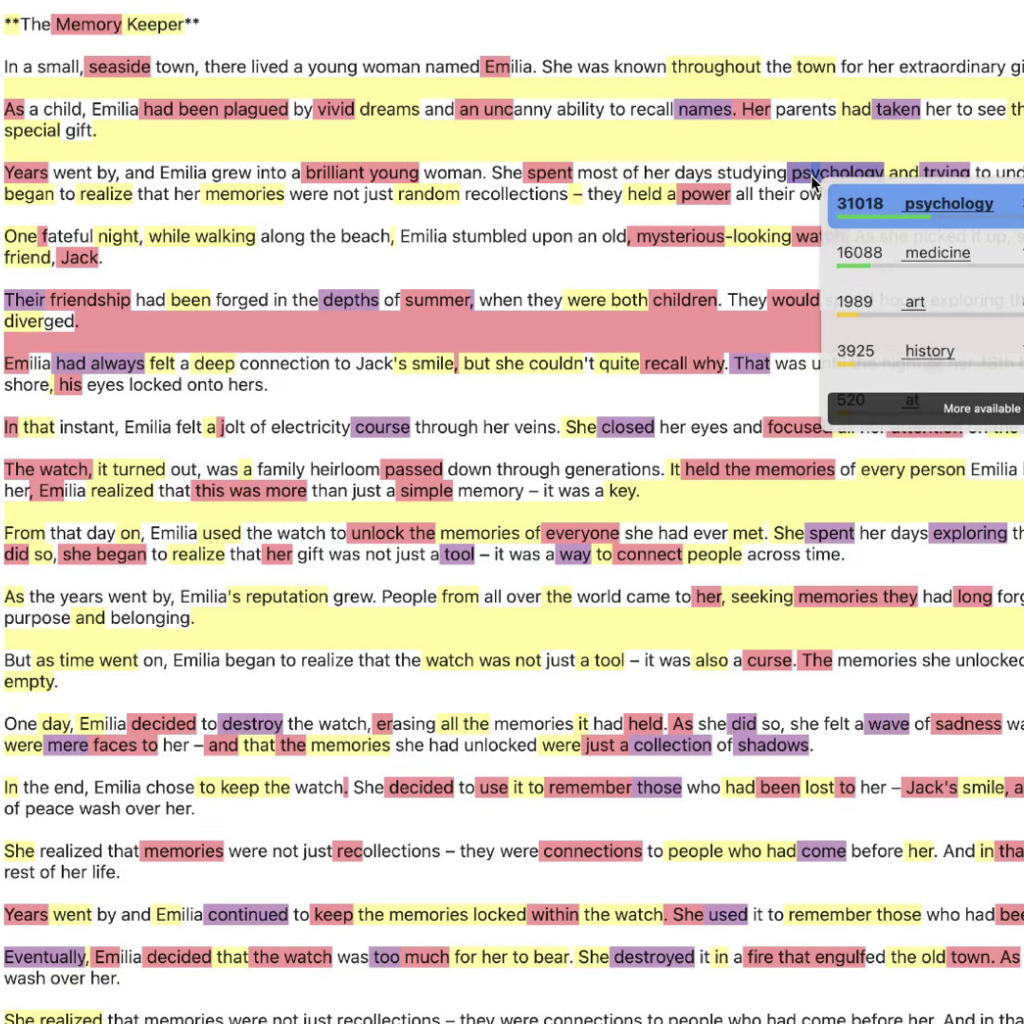

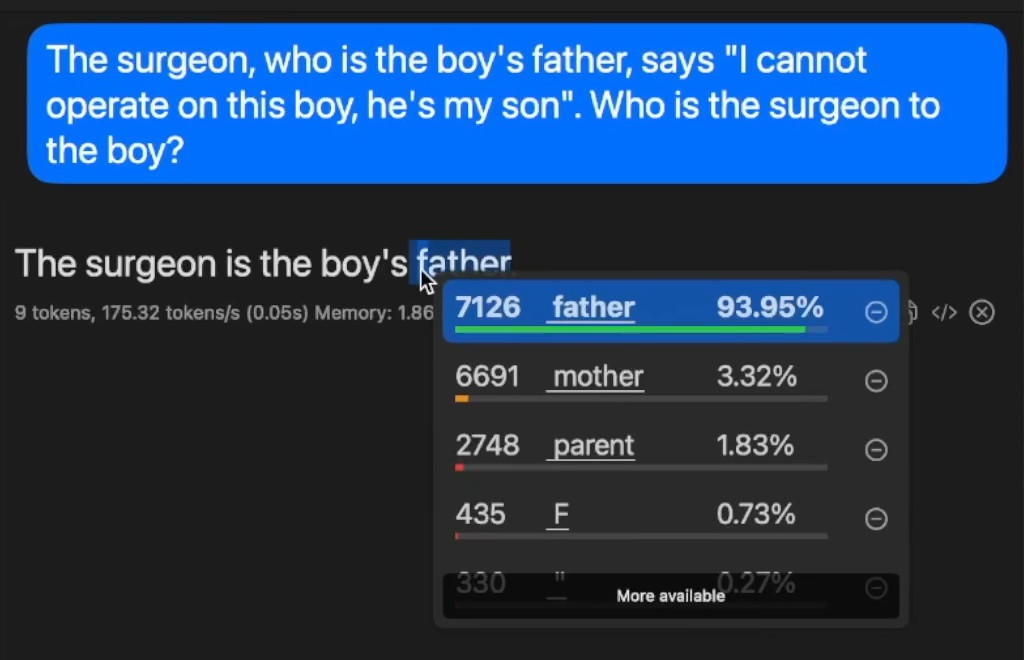

Token Inspection

Tap the token inspector icon to reveal the probability of each candidate token, showing exactly which alternatives the model was weighing at each step.

Token Entropy: Instantly spot uncertain (high-entropy) tokens, so you can better gauge the confidence of a generation.

Token Selection: Pick a specific alternative token to manually explore other branches of the generation.

Token Exclusion: Select tokens to exclude from the generation, such as foreign characters.
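To make the token inspection features concrete, here is a minimal Python sketch of how per-token probabilities, entropy, and exclusions typically relate. It assumes the model exposes raw logits for each candidate token as a dict; the helper names, the toy tokens, and the 1-bit threshold for flagging a step as contentious are illustrative assumptions, not this app's implementation.

```python
import math

def softmax(logits):
    """Turn raw logits into probabilities (subtracting the max for stability)."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def entropy_bits(probs):
    """Shannon entropy in bits: 0 when one token is certain, higher when the model hesitates."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def contentious_positions(step_probs, threshold_bits=1.0):
    """Indices of generation steps whose entropy meets an (assumed) threshold."""
    return [i for i, probs in enumerate(step_probs) if entropy_bits(probs) >= threshold_bits]

def exclude(logits, banned):
    """Token exclusion: a banned token's logit becomes -inf, so its probability is 0.
    Assumes at least one token is left unbanned."""
    return {tok: (-math.inf if tok in banned else v) for tok, v in logits.items()}

# Hypothetical logits for three candidate next tokens.
logits = {"cat": 3.0, "dog": 3.0, "emu": 0.5}
probs = softmax(exclude(logits, {"emu"}))
print(probs["emu"], entropy_bits(probs))  # → 0.0 1.0
```

Conceptually, token selection then amounts to appending a chosen candidate (for example the second most likely one) instead of the sampled token and continuing generation from that new prefix.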

Private Server

Serve models and run inference over your local network or the internet with SSL encryption, keeping your inference private and on your premises.

Compatible APIs: Also includes Ollama- and OpenAI-compatible APIs for application development.

Distributed Inference: Link two computers to run larger models than either could run alone.
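An OpenAI-compatible API means standard chat-completions clients should be able to talk to the server. As a rough sketch, assuming the server exposes the usual /v1/chat/completions route, the following builds such a request using only the Python standard library. The host, port, model name, and optional bearer key are placeholders, not documented values for this server.

```python
import json
import urllib.request

def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (without sending) a POST to an OpenAI-style chat completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # only needed if the server is configured to require a key (assumption)
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )

# Placeholder address and model name for a server on the local network.
req = build_chat_request("https://192.168.1.20:8443", "my-local-model", "Hello!")
print(req.get_method(), req.full_url)  # → POST https://192.168.1.20:8443/v1/chat/completions
```

Sending it with urllib.request.urlopen(req) would return a JSON body; in the OpenAI format the reply text sits under choices[0].message.content. If the server uses a self-signed certificate, urlopen needs a context argument with an ssl.SSLContext that trusts it.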

Expert Control

Unlock faster inference or higher-quality output by controlling the number of experts activated for each token in Mixture of Experts (MoE) models.
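MoE layers typically route each token to its top-k experts by router score, so the expert count directly sets how much compute each token costs. The Python sketch below shows one common top-k gating scheme under simplified assumptions: scalar inputs, toy lambda "experts" standing in for feed-forward networks, and a softmax over only the selected scores. It is not this app's implementation, and real models differ in routing details; how changing k affects output quality depends on the model.

```python
import math

def route_top_k(router_logits, k):
    """Top-k gating: keep the k highest-scoring experts and softmax their scores.

    router_logits holds one score per expert for a single token.
    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    if not 1 <= k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(x, experts, router_logits, k):
    """Only the k selected experts are evaluated; the others cost nothing for this token."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Four toy experts and one token's router scores (all hypothetical).
experts = [lambda x: 1 * x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
scores = [0.1, 2.0, 1.0, -1.0]
print(moe_layer(1.0, experts, scores, k=1))  # → 2.0 (only expert 1 runs)
print(moe_layer(1.0, experts, scores, k=2))  # experts 1 and 2 run, blended by their weights
```

Lowering k skips expert evaluations (faster), while raising it blends more experts into each token's output.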

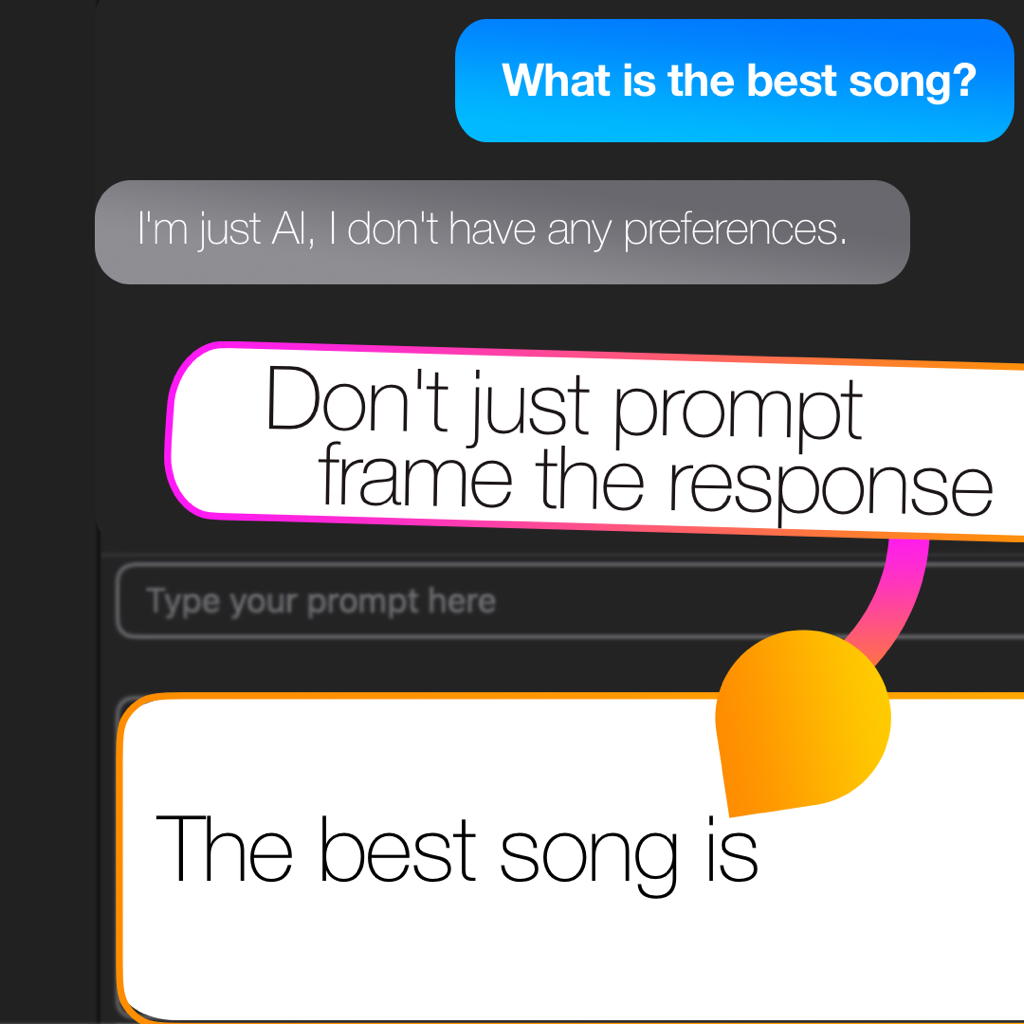

Prompt Prefilling

Expand the prompt field to seed a model's response by prefilling the Assistant message.

This technique lets you steer the model's behavior, skip preambles, enforce specific formats such as JSON or XML, and even unlock gated responses.
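Prefilling generally works because the chat template leaves the final assistant turn open, so the model simply continues from the text you supplied. The sketch below illustrates that idea with a made-up template; the <|role|> and <|end|> markers and the render_prompt helper are hypothetical, since every model family uses its own chat template.

```python
def render_prompt(messages):
    """Render chat messages with a hypothetical template.

    If the final message comes from the assistant, it is treated as a prefill:
    no end-of-turn marker is written, so generation continues from its text.
    """
    parts = []
    for i, msg in enumerate(messages):
        parts.append(f"<|{msg['role']}|>\n{msg['content']}")
        is_prefill = i == len(messages) - 1 and msg["role"] == "assistant"
        if not is_prefill:
            parts.append("<|end|>\n")
    if messages[-1]["role"] != "assistant":
        parts.append("<|assistant|>\n")  # no prefill: open an empty assistant turn
    return "".join(parts)

messages = [
    {"role": "user", "content": "Name three primary colors."},
    {"role": "assistant", "content": '{"colors": ['},  # prefill pushes the answer into JSON
]
print(render_prompt(messages))
```

Because the rendered prompt ends mid-JSON, the most natural continuation for the model is to finish the array rather than start with a preamble.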

Custom Tool Calls

The tools editor lets you enable built-in tools such as get_webpage_content or add your own, so that models can call them when needed.
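A custom tool usually pairs a JSON Schema description, which the model sees, with a handler that runs when the model emits a call. The following Python sketch assumes the OpenAI-style tool-calling shape (a tool call with an id, a function name, and JSON-encoded arguments); the word_count tool and the registry layout are hypothetical illustrations, not the app's tools editor format.

```python
import json

# A hypothetical custom tool: schema for the model plus a local handler.
TOOLS = {
    "word_count": {
        "description": "Count the words in a piece of text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda args: {"words": len(args["text"].split())},
    },
}

def tool_schemas():
    """The part of each tool definition that is sent to the model."""
    return [
        {
            "type": "function",
            "function": {"name": name, "description": t["description"], "parameters": t["parameters"]},
        }
        for name, t in TOOLS.items()
    ]

def dispatch(tool_call):
    """Run one tool call emitted by the model and wrap the result as a tool message."""
    fn = tool_call["function"]
    tool = TOOLS.get(fn["name"])
    if tool is None:
        result = {"error": f"unknown tool {fn['name']}"}
    else:
        result = tool["handler"](json.loads(fn["arguments"]))
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}

call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "word_count", "arguments": json.dumps({"text": "the quick brown fox"})},
}
print(dispatch(call))  # → {'role': 'tool', 'tool_call_id': 'call_1', 'content': '{"words": 4}'}
```

The returned tool message is appended to the conversation so the model can use the result in its next turn.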

More Features

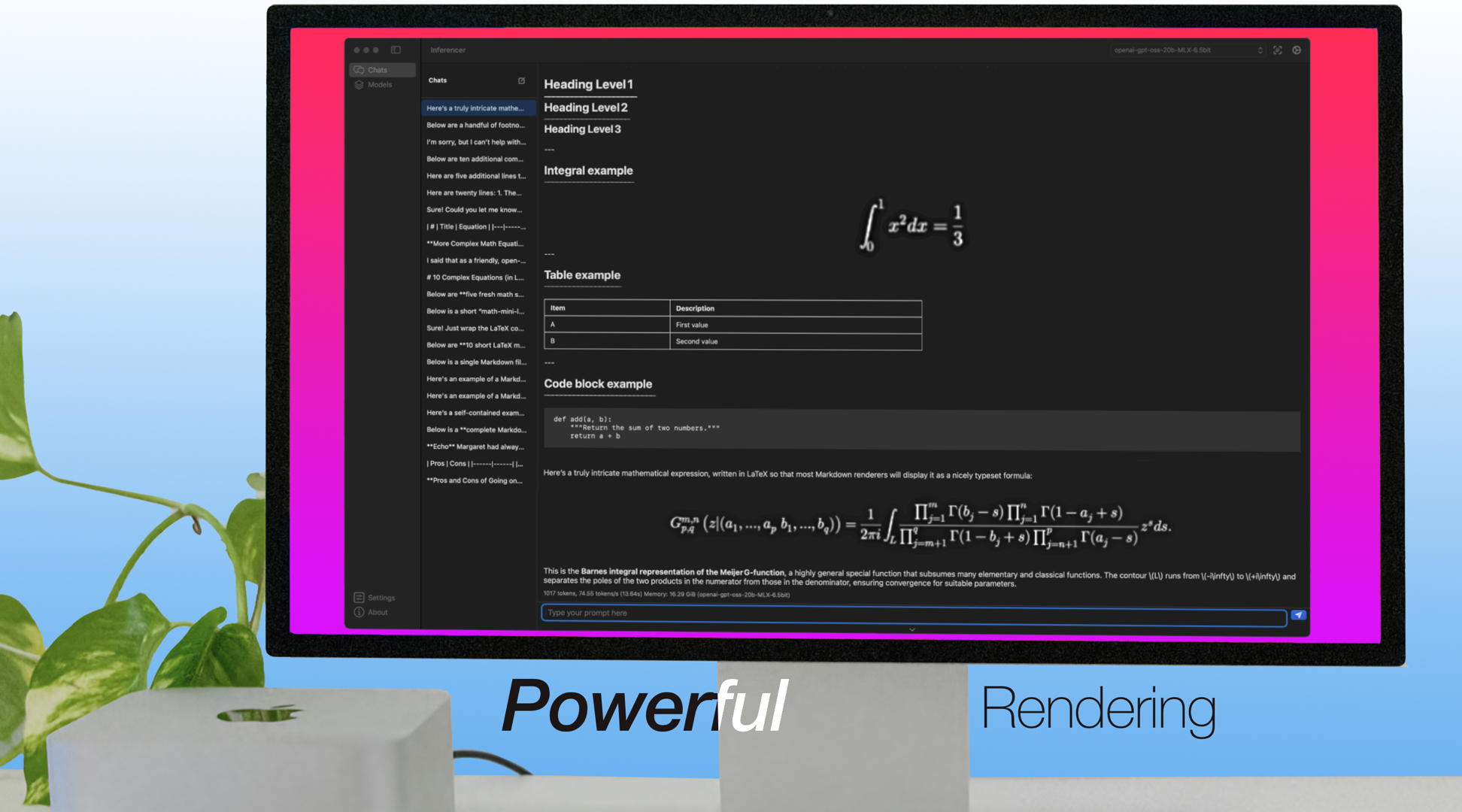

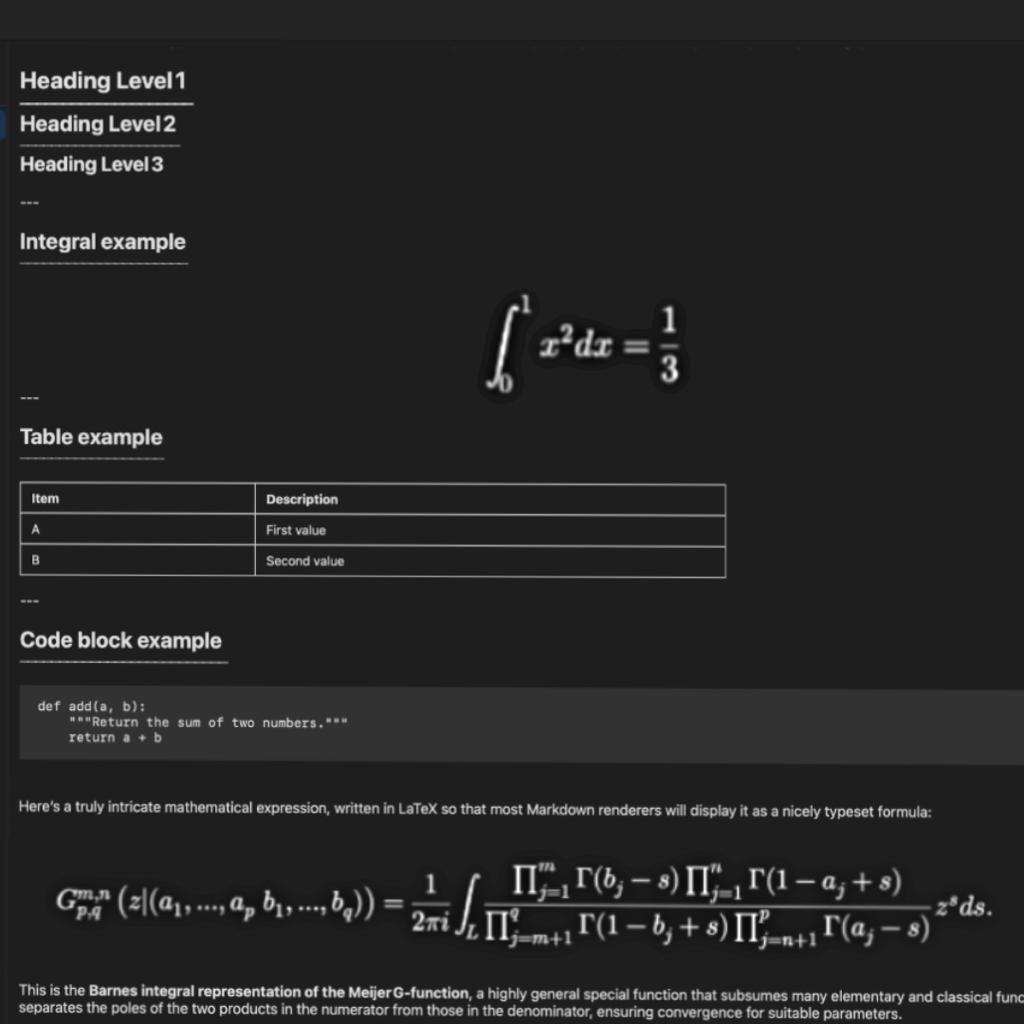

Rendering: Support for Markdown with advanced LaTeX rendering. Code previews are coming soon.

Model Streaming: Stream large models directly from storage, using a custom read-only implementation designed for low-resource devices.

Batching: Continuously combines multiple requests to the same model into a single forward pass, dramatically improving total throughput.
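Continuous batching admits a waiting request as soon as a slot in the running batch frees up, instead of waiting for the whole batch to finish. The Python simulation below sketches that scheduling idea; step_fn is a hypothetical stand-in for one batched forward pass, and each request is assumed to need a known number of tokens (at least one), which a real server would not know in advance.

```python
from collections import deque

def continuous_batching(requests, max_batch, step_fn):
    """Simulate continuous batching.

    requests: list of (request_id, tokens_to_generate), each count >= 1.
    step_fn: called once per forward pass with the ids in the current batch.
    Returns (number_of_forward_passes, finish_order).
    """
    waiting = deque(requests)
    active = {}  # request_id -> tokens still to generate
    finished = []
    passes = 0
    while waiting or active:
        # Refill free slots immediately rather than waiting for the batch to drain.
        while waiting and len(active) < max_batch:
            rid, n = waiting.popleft()
            active[rid] = n
        step_fn(list(active))  # one pass yields one token per active request
        passes += 1
        for rid in list(active):
            active[rid] -= 1
            if active[rid] <= 0:
                finished.append(rid)
                del active[rid]
    return passes, finished

batches = []
print(continuous_batching([("a", 3), ("b", 1), ("c", 2)], max_batch=2, step_fn=batches.append))
# → (3, ['b', 'a', 'c'])
print(batches)  # → [['a', 'b'], ['a', 'c'], ['a', 'c']]
```

For comparison, running the three requests one at a time would take 3 + 1 + 2 = 6 passes, and static batching (finishing a and b before admitting c) would take 5.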

Understand why.

Pricing

Base

✓ Unlimited processing

✓ Markdown rendering

✓ Model control settings

✓ Download models

‒ Limited inference server

‒ Limited model streaming

‒ Limited distributed compute

‒ Limited batching

✓ Auto-load/unload settings

✓ Retention control settings

✓ Parental controls

‒ Limited custom tools

‒ Limited prompt prefilling

‒ Limited token entropy

‒ Limited token exclusions

‒ Limited token probabilities

‒ Limited expert control

‒ Limited Shortcuts integration

✓ Xcode Intelligence

✓ Visual Studio Code

Professional

✓ Unlimited processing

✓ Markdown rendering

✓ Model control settings

✓ Download models

✓ Encrypted inference server

✓ Model streaming

✓ Distributed compute

✓ Multi-generation batching

✓ Auto-load/unload settings

✓ Retention control settings

✓ Parental controls

✓ Unlimited custom tools

✓ Unlimited prompt prefilling

✓ Unlimited token entropy

✓ Unlimited token exclusions

✓ Unlimited token probabilities

✓ Mixture of experts control

✓ Shortcuts integration

✓ Xcode Intelligence

✓ Visual Studio Code

✓ Support the development

Subscribe for updates

With more features coming soon, you can be the first to know.